work

Working on a project is not only a way for me to learn more about myself but also a way to learn about the world. Here are the main projects I've done in the past three years:

Clarity

Purpose: Explore tools for thought built around the belief that thoughts are non-linear, interwoven, and best navigated with extensive keyboard-shortcut support.

Work: Designed the system and interactions.

Result: to be decided.

Quick fly-through of navigating and connecting thoughts with the keyboard.

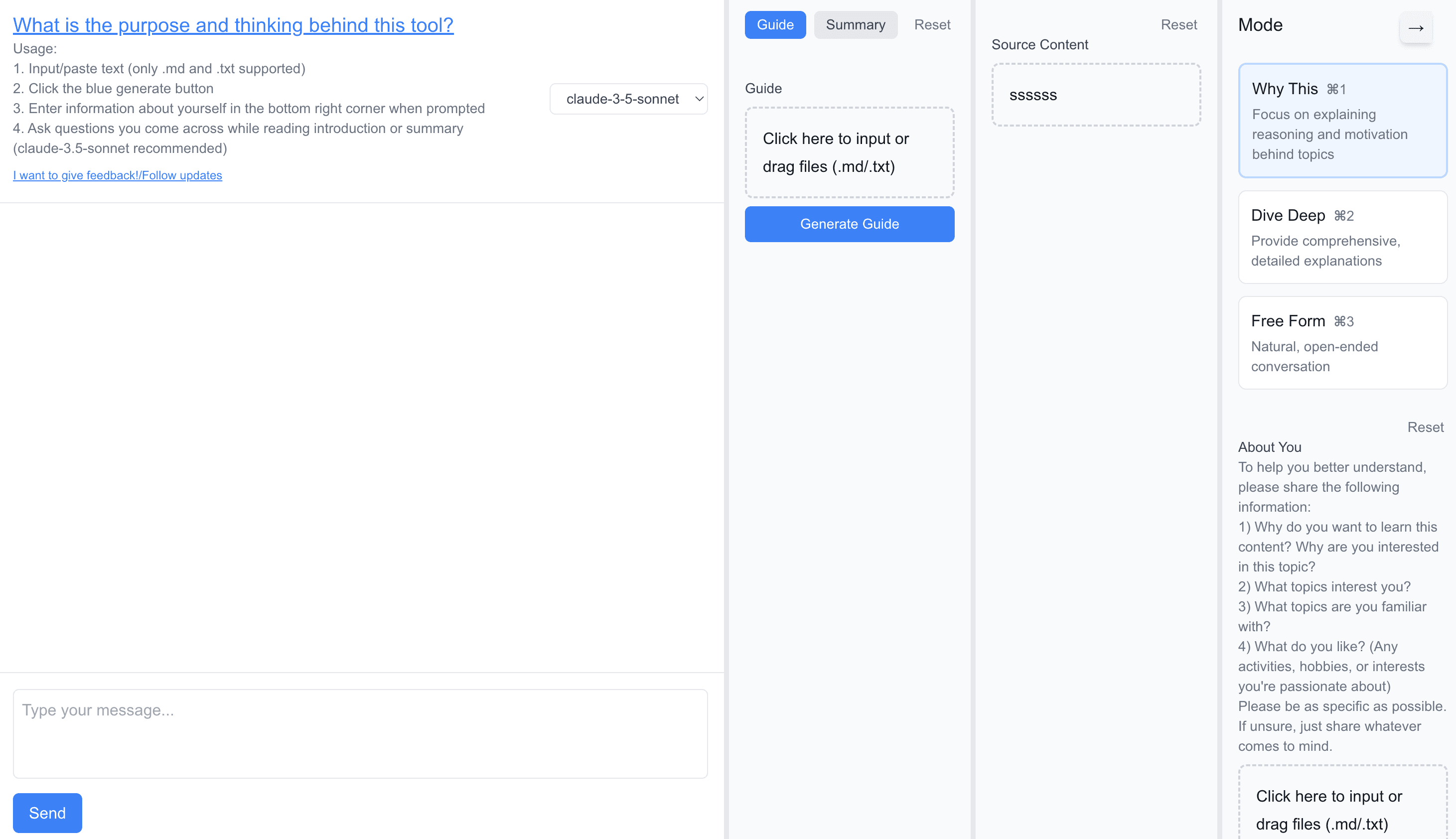

Clarity Reads

Purpose: Help myself read history books and other long, dense texts more easily.

Work: Vibe-coded everything so Claude can reshape chapters into something navigable without losing the details.

Result: to be decided.

Threads of historical events and the relationships among people in the chapter

Draw to Create AI

Purpose: Built this for my younger self because I love to imagine stickmen fighting, and I'd absolutely love this if I were a kid again.

Work: Built an iOS application with AI integration for creative drawing tools.

Result: Available on the App Store.

Modern soldiers fighting medieval wizards

noey.ai

Purpose: To build the fastest and cheapest way for people to build a personal website.

Work: Collaborated with Enrico; I handled 100% of the technical work. Built a full-stack application; built a RAG system for the style guide; implemented a payment system with LemonSqueezy; implemented data tracking with Amplitude; implemented an online website editor with double-click-to-edit text and drag-and-drop image replacement; implemented a tenant domain system with one domain for displaying user-created sites and one for the main app.

Result: We launched, got 43 users, and realized that the giants in the website-building field were already moving quickly into this AI-assisted area, and that focusing only on personal websites (which the giants had overlooked) wasn't interesting enough, so we stopped working on it.

Demo video of website builder in action
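
A minimal sketch of how a two-domain tenant setup like the one described above can route requests by hostname. This is illustrative, not the actual noey.ai code: the domain names, the one-subdomain-per-site convention, and the `resolve_host` helper are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical domains, chosen only for this example.
APP_DOMAIN = "app.example.com"      # serves the main application
SITES_DOMAIN = "sites.example.com"  # serves user-created sites as subdomains


@dataclass(frozen=True)
class Route:
    target: str                   # "app", "site", or "unknown"
    tenant: Optional[str] = None  # site owner's slug when target == "site"


def resolve_host(host: str) -> Route:
    """Map a Host header value to the main app or to a tenant site."""
    # Drop an optional port, normalize case, and strip a trailing dot.
    hostname = host.split(":", 1)[0].strip().lower().rstrip(".")
    if hostname == APP_DOMAIN:
        return Route("app")
    suffix = "." + SITES_DOMAIN
    if hostname.endswith(suffix):
        tenant = hostname[: -len(suffix)]
        # Accept exactly one label in front of the sites domain.
        if tenant and "." not in tenant:
            return Route("site", tenant)
    return Route("unknown")
```

Under these assumptions, `resolve_host("alice.sites.example.com")` resolves to the tenant `alice`, while any host that matches neither domain falls through to `unknown`. Keeping user sites on a separate domain from the app also keeps their cookies apart.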

Two Lambda Persona Agent

Purpose: To build a fully autonomous agentic influencer that dominates social media communities at scale.

Work: I worked with Leo on AI engineering and ran lots of experiments on giving AI tools, personality steering, and context engineering. One example is letting Claude Code generate motion videos using Remotion and math/science videos using Manim.

Result: Still working on this!

Paper Visualizer

Purpose: Help researchers quickly understand academic papers through visual flowcharts of research ideas and methodologies.

Idea: Reading literature reviews is time-consuming and researchers need faster ways to grasp paper concepts and avoid redundant research - visual flowcharts could accelerate academic comprehension.

Work: Interviewed 50+ researchers and students to understand their needs and pain points. Built a web application with Next.js, Tailwind CSS, Supabase, and major LLM APIs. Launched on major social media platforms. Sent emails to 7,000 students at PKU to get feedback.

Result: Users report spending 5x less time understanding a paper. 100+ daily active users within weeks of launch, 180 upvotes on Reddit, and 1,800+ signups.

Demo video showing Paper Visualizer in action

Personalized Daily ArXiv

Purpose: Help researchers efficiently filter daily ArXiv publications to find relevant papers in their field.

Idea: Researchers spend 30-60 minutes every 2 days manually screening 100+ new papers - AI could transparently filter and recommend based on personal research interests with clear reasoning.

Work: Developed a recommendation system using LLM-based filtering with transparent reasoning and personalized user-preference learning.

Result: $10 in subscription revenue from 2 paying users, 62 email signups, and a 4% outreach response rate.

Demo video showing Personalized Daily ArXiv in action

Insight Generator

Idea: Something useful that came out of experimenting with extracting insights from long articles in a lossless way.

Work: Experimented with different LLM APIs, different prompt engineering techniques, and different ways of understanding the structure of knowledge.

Result: Launched in production in Heptabase in Sept. 2024, with the learnings and prompts from my experiments migrated into the product. In 2024 this was a novel concept. In 2025, especially after other, more general AI capabilities were integrated into Heptabase, it became more or less obsolete.

the launch of the insight generation feature

Select to Explain

Purpose: Help cross-domain researchers quickly understand unfamiliar concepts by selecting text and getting tailored explanations.

Idea: Cross-domain researchers struggle with technical jargon when reading papers outside their expertise - contextual explanations could bridge knowledge gaps without switching to Google or ChatGPT.

Work: Built a Chrome extension; talked to users.

Result: The product went viral on Threads with 90K views and 325 reposts and reached 33 users on the Chrome Web Store, including 8 power users (30+ uses in 7 days), but the problem wasn't painful enough for users to pay, which led to a pivot.

Demo video showing Select to Explain Chrome extension in action

Inline Annotation

Idea: Cross-domain learners constantly encounter unfamiliar terms requiring manual lookup - AI could automatically annotate based on user background to streamline learning.

Work: Built a Chrome extension using GPT-4o-mini to detect and annotate technical terms with contextual explanations based on the user's expertise level.

Result: 26 users on Chrome Web Store, 70% annotation redundancy due to AI limitations, and negative user growth post-launch.

AI Learning Assistant

Idea: Cross-domain learners need a way to understand unfamiliar concepts quickly. Learning by analogy is a powerful way to understand new concepts.

Work: Built a chat-based learning assistant using Next.js, Tailwind CSS, and major LLM APIs.

Result: No meaningful user engagement.

Learning: The product failed for lack of a clear user persona and value proposition, which taught me the importance of market validation before development.

Chat-based learning assistant interface

Reconstruct

Purpose: Help users visually compare similar ideas and extract insights from multiple texts using a visual whiteboard that cuts text and organizes content.

Idea: Extracting useful insights from long-form content (cutting, categorizing, formatting, and organizing notes) takes hours. A visual, smart whiteboard could help users focus on understanding and asking questions rather than on manual knowledge organization.

Work: Built a visual whiteboard application for text analysis and comparison. Launched on multiple platforms.

Result: No user feedback received. I learned that for a product to be successful, it needs a very clear target persona to start iterating with. I still adore this idea tho!

Demo video showing Reconstruct visual whiteboard in action

Markdown File Query System

Purpose: Notion AI had launched, but I didn't want to pay $10 a month, so I built my own version.

Work: Built a RAG system using Python, with LangChain for text processing, OpenAI embeddings for semantic understanding, and the Pinecone vector database for similarity search.

Result: 30 GitHub stars. I learned a lot about RAG, LangChain, and Pinecone. It was my first AI-engineering project.
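
For readers new to RAG, here is a rough, dependency-free sketch of the retrieval step in a pipeline like the one above. It is not the project's code: a toy bag-of-words vector stands in for OpenAI embeddings, an in-memory list stands in for Pinecone, and `embed`, `chunk_markdown`, and `retrieve` are hypothetical names for this example.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk_markdown(doc: str) -> List[str]:
    """Split a Markdown document into chunks at blank lines."""
    return [c.strip() for c in re.split(r"\n\s*\n", doc) if c.strip()]


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query (the 'R' in RAG)."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


notes = (
    "# Groceries\nBuy oat milk and tofu.\n\n"
    "# Travel\nBook the train to Kyoto in April.\n\n"
    "# Reading\nFinish the history book on the Tang dynasty."
)
chunks = chunk_markdown(notes)
top = retrieve("When is the train to Kyoto?", chunks, k=1)
```

In a full pipeline, the retrieved chunks would be pasted into the LLM prompt as context for answering the query.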

High School Physics Teacher

Purpose: Share effective study methods and advanced physics concepts with high school students as a substitute physics teacher (I was in my second year of college).

Work: Taught 6 hours across multiple sessions using presentation tools, interactive demonstrations, and the Cornell note-taking system.

Result: 90% of students said my teaching was very helpful. Several students said they finally understood concepts they hadn't understood in the past 3 years.

Social Vegan

Match results showing compatibility analysis

Vector database operations and matching flow